How to use a pre-defined TensorFlow Dataset?

TensorFlow Datasets (TFDS), a companion library for TensorFlow 2.0, provides a collection of pre-defined, ready-to-use datasets. It is easy to use and often handy when you are just experimenting with new models.

In this short post, I will show you how to use a pre-defined TensorFlow dataset.

Prerequisite

Make sure that you have tensorflow and tensorflow-datasets installed.

pip install -q tensorflow-datasets tensorflow

Using a TensorFlow dataset

In this example, we will use the imagenette dataset, a small subset of 10 easily classified classes from ImageNet.

The imagenette page in the TensorFlow Datasets catalog describes this dataset, and the catalog also lists all the other available datasets.

Load the dataset

We will use the tfds.builder function to get a builder for the dataset, download and prepare the data, and then load it.

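import tensorflow as tf
import tensorflow_datasets as tfds

# Get a builder for the full-size configuration of imagenette
imagenette_builder = tfds.builder("imagenette/full-size")

# Metadata about the dataset (features, splits, ...)
imagenette_info = imagenette_builder.info

# Download the data and prepare it on disk (skipped if it is already prepared)
imagenette_builder.download_and_prepare()

# Load every split as a tf.data.Dataset of (image, label) tuples
datasets = imagenette_builder.as_dataset(as_supervised=True)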
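To make the effect of as_supervised concrete: without it, each example comes back as a dictionary of features; with it, each example becomes a 2-tuple built from the dataset's supervised keys. The hypothetical helper below mimics that conversion in plain Python, assuming the feature keys are "image" and "label" as for imagenette. It is an illustration only, not how TFDS implements it.

```python
from typing import Any, Dict, Tuple


def as_supervised_pair(
    example: Dict[str, Any], keys: Tuple[str, str] = ("image", "label")
) -> Tuple[Any, Any]:
    """Hypothetical helper: turn a feature dictionary into an (input, target) tuple.

    The default keys are an assumption matching imagenette's features.
    """
    input_key, target_key = keys
    return example[input_key], example[target_key]


# A feature dictionary like the ones yielded when as_supervised=False
example = {"image": [[0, 128], [255, 64]], "label": 7}
image, label = as_supervised_pair(example)
```

Because each element is a tuple, functions passed to map can take two arguments, as in lambda image, label: ....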

Note:

- we are setting

as_supervisedastrueso that we can perform some manipulations on the data. - we are creating an

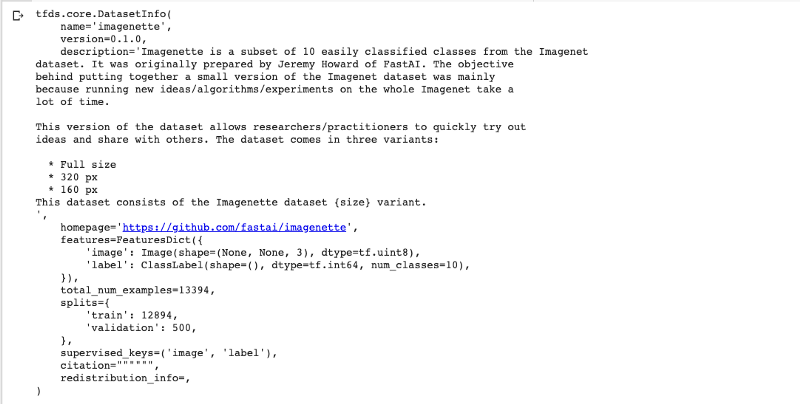

imagenette_infoobject that contains the information about the dataset. It prints something like this:

Get split size

We can get the size of the train and validation set using the imagenette_info object.

train_examples = imagenette_info.splits['train'].num_examples

validation_examples = imagenette_info.splits['validation'].num_examples

These counts are useful when setting steps_per_epoch and validation_steps while training the model.
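Because the batched datasets built in this post repeat indefinitely, training needs to be told how many batches make up one epoch. A minimal sketch, assuming one epoch should cover every example once: round the split size up to a whole number of batches. The helper name steps_for is hypothetical.

```python
def steps_for(num_examples: int, batch_size: int) -> int:
    """Hypothetical helper: number of batches needed to see every example once.

    The last batch may be smaller than batch_size, so we round up
    (integer ceiling division avoids floating-point rounding).
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return -(-num_examples // batch_size)


# Example with made-up numbers: 1000 examples in batches of 32 -> 32 steps
steps = steps_for(1000, 32)
```

In this post you would pass steps_for(train_examples, batch_size) as steps_per_epoch and steps_for(validation_examples, batch_size) as validation_steps when calling model.fit.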

Create batches

Next, we will create batches so that the data can be fed to the model during training. On low-RAM devices, or for large datasets, it is usually not possible to load the whole dataset into memory at once.
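To see why a streaming pipeline keeps memory bounded, here is a pure-Python analogy of the shuffle(100).batch(batch_size) chain used in the next step. It is a sketch, not tf.data's implementation: buffered_shuffle and batched are hypothetical helpers. The shuffle holds at most buffer_size elements at a time and emits a random one each time the buffer is full; batching then groups consecutive elements, so at no point is the whole dataset in memory.

```python
import random
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def buffered_shuffle(items: Iterable[T], buffer_size: int, seed: int = 0) -> Iterator[T]:
    """Hypothetical helper: approximate shuffle using a fixed-size buffer."""
    rng = random.Random(seed)
    buffer: List[T] = []
    for item in items:
        buffer.append(item)
        if len(buffer) >= buffer_size:
            # Buffer is full: emit a random element (swap it to the end, then pop)
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    # Input exhausted: drain what is left in random order
    rng.shuffle(buffer)
    yield from buffer


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Hypothetical helper: group consecutive items; the last batch may be smaller."""
    it = iter(items)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


# Stream 10 items through a buffer of 4, in batches of 4
batch_sizes = [len(b) for b in batched(buffered_shuffle(range(10), 4), 4)]
```

A larger buffer gives a better shuffle at the cost of more memory, which is the same trade-off as the buffer size passed to shuffle.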

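batch_size = 32  # example value; pick one that fits your memory

train, test = datasets['train'], datasets['validation']

# Resize every image to 448 x 448, shuffle with a 100-element buffer, batch, and repeat indefinitely
train_batch = train.map(
    lambda image, label: (tf.image.resize(image, (448, 448)), label)
).shuffle(100).batch(batch_size).repeat()

validation_batch = test.map(
    lambda image, label: (tf.image.resize(image, (448, 448)), label)
).shuffle(100).batch(batch_size).repeat()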

Note: We take the train and validation splits and resize all images to 448 x 448. You can apply any other transformation with the map function too; it is a convenient place to resize or normalize images or to perform any other preprocessing step.
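The map function applies a function to every (image, label) pair. As a framework-free sketch of the normalization step mentioned above, the hypothetical helpers below scale 8-bit pixel values into [0, 1] and leave labels untouched. Images are assumed to be grayscale lists of rows for simplicity. In the TensorFlow pipeline, the rough equivalent would be dividing the resized image by 255.0 inside the lambda.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Image = List[List[int]]  # assumption: a grayscale image as rows of 0-255 ints
Pair = Tuple[Image, int]


def normalize(image: Image, label: int) -> Tuple[List[List[float]], int]:
    """Hypothetical helper: scale pixel values into [0.0, 1.0]; keep the label."""
    return [[px / 255.0 for px in row] for row in image], label


def map_pairs(pairs: Iterable[Pair], fn: Callable) -> Iterator[tuple]:
    """Hypothetical helper: lazily apply fn to each (image, label) pair, like map."""
    for image, label in pairs:
        yield fn(image, label)


normalized = list(map_pairs([([[0, 255], [51, 102]], 3)], normalize))
```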

That’s it. You can now use this data for your model. Here’s the link to the Google Colab with the complete code.

Written on June 18, 2020 by Vivek Maskara.

Originally published on Medium